This article is related to a current exhibition showcasing creative AI pieces at our RTL offices in Hamburg. The exhibit connected to this article is a text-to-image demonstration of the power that diffusion models bring to the field of artificial intelligence.

Ever since the dawn of humanity, creating artwork and writing stories has been a labor of love for people around the world. However, in today’s highly digital age, we’ve begun to delegate some of the creative legwork to machines. One way of doing so is by means of generative artificial intelligence models. Generative models work by learning the probability distribution of their training data and then sampling new examples from it. The generative model covered in this post is the diffusion model.
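To make "learning the distribution" a little more concrete, here is a deliberately tiny sketch. It assumes the simplest possible generative model, a single Gaussian fitted to made-up numbers, which is far simpler than a diffusion model. The data and the function names (`fit_gaussian`, `gaussian_density`, `generate`) are invented for illustration only.

```python
import numpy as np

rng = np.random.default_rng(42)

# Made-up training data: 5000 numbers drawn around 170 (purely illustrative).
training_data = rng.normal(loc=170.0, scale=8.0, size=5000)

def fit_gaussian(samples):
    """'Learn' the distribution by estimating its mean and standard deviation."""
    return float(samples.mean()), float(samples.std())

def gaussian_density(x, mean, std):
    """Probability density the fitted model assigns to the value x."""
    z = (x - mean) / std
    return float(np.exp(-0.5 * z * z) / (std * np.sqrt(2.0 * np.pi)))

def generate(mean, std, n, rng):
    """Create new data points by sampling from the learned distribution."""
    return rng.normal(mean, std, size=n)

mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, 5, rng)
print("learned mean and std:", round(mean, 2), round(std, 2))
print("newly generated samples:", np.round(new_samples, 1))
```

The generated samples never appeared in the training data, yet they look like they could have. Image models follow the same idea, only with a vastly more complicated distribution.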

The diffusion model borrows its name from the thermodynamic phenomenon of diffusion, in which particles naturally travel from areas of high density to areas of low density. Applied to data, this corresponds to the gradual loss of information as noise is added. Diffusion models learn the pattern of this systematic decay of their training data and, by reversing the process, can recover the lost information. As such, it would be more accurate to call them reverse diffusion models, but that name doesn’t quite roll off the tongue, does it?
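The decay described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code behind any of the models in this post: it assumes a Gaussian noising process with a linear noise schedule (the values `1e-4` to `0.02` over 1000 steps are an assumed, commonly used choice), and an 8x8 array of random numbers stands in for an image. In a real diffusion model a neural network is trained to estimate the noise; here the true noise is handed to `recover_x0` as a stand-in for that estimate.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (assumed linear schedule)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Jump straight to noising step t: mix the clean data with Gaussian noise."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

def recover_x0(x_t, noise_estimate, t, alpha_bar):
    """Invert the mixing above, given an estimate of the noise that was added."""
    return (x_t - np.sqrt(1.0 - alpha_bar[t]) * noise_estimate) / np.sqrt(alpha_bar[t])

def signal_fraction(alpha_bar, t):
    """Weight of the original data that is still present at step t."""
    return float(np.sqrt(alpha_bar[t]))

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # cumulative share of signal variance kept

x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a tiny image

# Forward process: the further we go, the less of the original remains.
for t in (0, 249, 499, 999):
    print(f"step {t + 1:4d}: signal weight = {signal_fraction(alpha_bar, t):.4f}")

# Reverse direction: with a good noise estimate, the original can be recovered.
x_t, noise = forward_diffuse(x0, 499, alpha_bar, rng)
x0_recovered = recover_x0(x_t, noise, 499, alpha_bar)
print("recovered original:", np.allclose(x0_recovered, x0))
```

The hard part, which this sketch skips entirely, is learning a good noise estimate from training data. That learned estimate is what lets the model start from pure noise and work its way back to an image.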

Due to their revolutionary capabilities, many modern tools are beginning to adopt diffusion models. Some examples follow:

Dall-E 2

OpenAI’s Dall-E 2 improves upon the first iteration of the model by using the diffusion method. Since its debut in April 2022, Dall-E 2 has performed impressive feats such as creating completely original images from sentences, adding photorealistic objects to existing images, and producing a varied collection of images from the same input. The following picture of mad scientist raccoons did not exist until the prompt was given to Dall-E 2. If you wish to try Dall-E 2 out for yourself, their waitlist for access can be found here.

Prompt: Mad scientist Raccoons

Stable diffusion model

The newer Stable Diffusion model, created by Stability AI, builds on the original diffusion method by using latent diffusion. Instead of working on the image itself, it uses an encoder and decoder to work in a lower-dimensional latent space. The encoder-decoder takes care of fine image details, which allows the diffusion model to focus on more important aspects such as semantics. As a result, Stable Diffusion can perform generation tasks faster and with less computational overhead than a traditional diffusion model that operates directly on pixels.
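To get a feel for why a latent space saves so much work, here is a toy sketch. It is not how Stable Diffusion's autoencoder works: the real encoder and decoder are learned neural networks, while `average_pool_encoder` and `nearest_decoder` below are hypothetical stand-ins that simply shrink and re-enlarge an array. The image size (512x512 with 3 channels) and the downsampling factor of 8 are assumptions chosen for illustration.

```python
import numpy as np

def average_pool_encoder(image, factor=8):
    """Toy 'encoder': average each factor x factor patch into a single value."""
    h, w, c = image.shape
    patches = image.reshape(h // factor, factor, w // factor, factor, c)
    return patches.mean(axis=(1, 3))

def nearest_decoder(latent, factor=8):
    """Toy 'decoder': blow the latent back up by repeating each value."""
    return latent.repeat(factor, axis=0).repeat(factor, axis=1)

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(512, 512, 3))  # stand-in for an RGB image

latent = average_pool_encoder(image)
decoded = nearest_decoder(latent)

print("image shape:  ", image.shape, "->", image.size, "values")
print("latent shape: ", latent.shape, "->", latent.size, "values")
print("decoded shape:", decoded.shape)
print("values per diffusion step reduced by a factor of", image.size // latent.size)
```

Every denoising step only has to process the small latent array, and the decoder is run once at the end to turn the result back into a full-size image. A learned decoder restores far more detail than this toy one, which is the part that lets the diffusion model concentrate on content rather than pixels.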

Midjourney

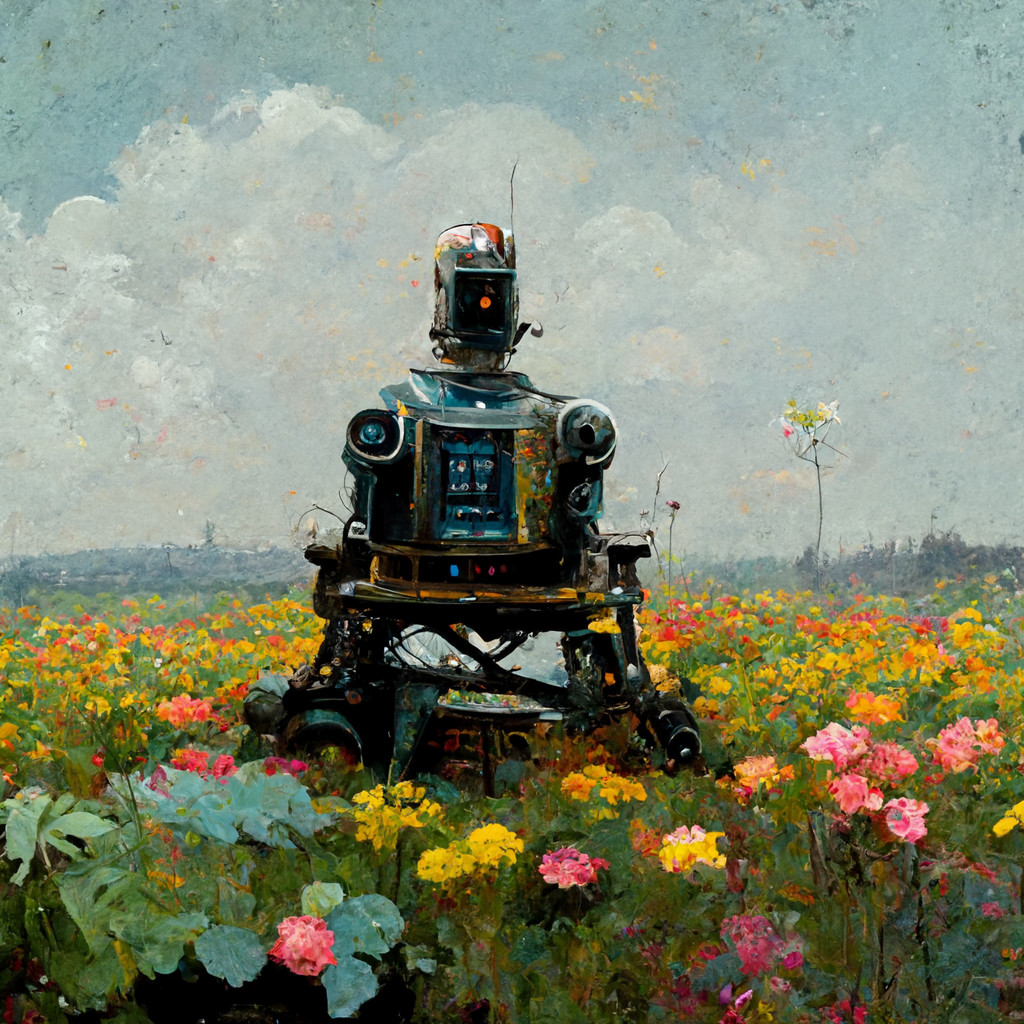

Midjourney is the name of both the research lab and its model. The lab has built an application that uses the stable diffusion model to let users create images from text prompts, with the user able to define the style and other details of the produced image. Aside from the example shown here, which I generated myself, a wide variety of examples can be found in the community showcase on their website. You’re welcome to try it out with this link!

Prompt: An army of barbarian chihuahuas surrounding a castle which is defended by royal pugs, cinematic lighting, oil painting, wide view

Impact

With AI this capable, it may seem that artists will soon lose their jobs, as many have feared during the wave of automation in recent years (e.g. Industrie 4.0). Despite these fears, these models are less a replacement for the honest-to-goodness human artists out there than a powerful tool at their fingertips, one they can both draw inspiration from and use to guide the creative process. A new kind of “prompt artist” has also emerged from this invention, turning the simple act of writing prompts into the skilled task of crafting the perfect textual input for pinpoint generation.

With all this in mind, the path ahead is clear: instead of fearing an AI takeover, we as humans should learn to better our own abilities with the help of the cutting-edge technology we’ve created.