Implementing Complex Information Flows with LangGraph in Multi-Agent LLM Systems

This section focuses on implementing complex information flows using LangGraph in multi-agent LLM systems. In Part 1, we discussed the usefulness of multi-agent systems and how to implement them with AutoGen.

LangChain is a widely used framework for developing LLM applications. It serves as the basis for LangGraph and provides:

- A vast selection of pre-defined text extraction tools

- Language models

- Other resources

- A hierarchy of classes

Getting started with LangChain

LangChain and LangGraph are built around the concept of chains: a PromptTemplate is usually combined with an LLM and, optionally, a validator. The simplest way to achieve this is the LangChain Expression Language (LCEL). Although it may take some time to get used to, it allows for concise and standardised code.
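To make the pipe syntax less opaque, here is a minimal plain-Python sketch of the idea behind it: each step exposes an invoke method, and the | operator returns a new step that feeds the output of the left side into the right side. The Step class and the three stand-in steps are hypothetical and are not LangChain classes; the real interface offers much more, such as streaming and batching.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a chain component with an invoke method."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that runs a first, then b on a's output
        return Step(lambda value: other.invoke(self.invoke(value)))


# Stand-ins for prompt template, model, and output parser (assumptions)
fake_prompt = Step(
    lambda variables: "Answer only with YES or NO! Question: {user_question}".format(**variables)
)
fake_llm = Step(lambda text: "YES" if "Question:" in text else "NO")
fake_parser = Step(lambda reply: reply.strip().upper() == "YES")

sketch_chain = fake_prompt | fake_llm | fake_parser
result = sketch_chain.invoke({"user_question": "What are the current trends in Italy?"})
print(result)
```

The composed object has the same invoke method as its parts, which is why a chain can itself be used as a step in a larger chain.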

    from langchain.output_parsers.boolean import BooleanOutputParser
    from langchain.prompts import PromptTemplate
    from langchain_openai import AzureChatOpenAI

    # Prompt with two variables: the chat history and the user question
    prompt = PromptTemplate.from_template(
        """Decide if the user question got sufficiently answered within the chat history. Answer only with YES or NO!
    Sentences like "I don't know" or "There is no information" are not sufficient answers.
    chat history: {messages}
    user question: {user_question}
    """
    )

    llm = AzureChatOpenAI(
        openai_api_version="2023-12-01-preview",
        azure_deployment="gpt-35-turbo",
        streaming=True,
    )

    # Converts the model's YES/NO reply into a Python bool
    parser = BooleanOutputParser()

    chain = prompt | llm | parser

    # All prompt variables must be passed when invoking the chain
    chain.invoke({
        "user_question": "What are the current trends in Italy?",
        "messages": ["The current trends in Italy today are Formula 1 and ChatGPT"],
    })  # Returns True

Empowering Agents through Function Calling

LangChain supports the execution of functions or tools. To execute a function, it must first be converted into a LangChain tool, which can be done:

- Explicitly

- Via function annotation

The latter has the advantage that the function's docstring is converted into the description passed to the language model, which avoids redundancy and is very straightforward, as shown in the following example:

    from langchain.tools import StructuredTool
    from pytrends.request import TrendReq


    def get_google_trends(country_name: str = "germany", num_trends: int = 5) -> list:
        """
        Fetches the current top trending searches for a given country from Google Trends.

        Parameters:
        - country_name (str): The English name of the country in lowercase letters
        - num_trends (int): Number of top trends to fetch. Defaults to 5.

        Returns:
        - A list of the top trending searches (empty if the request fails).
        """
        pytrends = TrendReq(hl="en-US", tz=360)
        try:
            trending_searches_df = pytrends.trending_searches(pn=country_name)
            return trending_searches_df.head(num_trends)[0].to_list()
        except Exception as e:
            # Return an empty list instead of None so callers always get a list
            print(f"An error occurred: {e}")
            return []


    # The signature and docstring become the tool's schema and description
    google_trends_tool = StructuredTool.from_function(get_google_trends)

Once created, the tool must be passed to the model. If ChatGPT is used, the model supports native function calling, so the tool only needs to be activated with the bind_functions call. The model can then trigger a corresponding function call when necessary.
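What this conversion amounts to can be sketched in plain Python: the function's name, signature, and docstring are turned into a JSON-like description that is sent to the model alongside the prompt. The helper describe_function and the demo function below are hypothetical and heavily simplified; LangChain's own converter handles many more cases.

```python
import inspect
from typing import Any, Callable, Dict

# Simplified mapping from Python annotations to JSON Schema type names
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a function-calling style description from signature and docstring."""
    properties: Dict[str, Any] = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value: the model must supply it
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_trends_demo(country_name: str, num_trends: int = 5) -> list:
    """Return the top trending searches for a country."""
    return []


schema = describe_function(get_trends_demo)
print(schema["parameters"])
```

Because the description is derived from the function itself, the docstring is the single place where the tool's purpose is documented for both developers and the model.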

However, to execute the function automatically and return the results to the model, the chain must be converted into an agent. For this purpose, there is a separate class, AgentExecutor, which takes:

- A name

- The agent and the necessary tools to execute the chain

    from langchain.agents import AgentExecutor, create_openai_functions_agent
    from langchain.agents.format_scratchpad.openai_functions import (
        format_to_openai_function_messages,
    )
    from langchain.agents.output_parsers.openai_functions import OpenAIFunctionsAgentOutputParser
    from langchain_community.tools.tavily_search import TavilySearchResults
    from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
    from langchain_core.runnables import RunnablePassthrough
    from langchain_core.utils.function_calling import convert_to_openai_function

    tavily_tool = TavilySearchResults(max_results=5)
    tools = [google_trends_tool, tavily_tool]

    system_prompt = "Your task is to get information on the current trends by using your tools."
    prompt = ChatPromptTemplate.from_messages([
        ("system", system_prompt),
        MessagesPlaceholder(variable_name="messages"),
        MessagesPlaceholder(variable_name="agent_scratchpad"),
    ])

    # Option 1: let LangChain assemble the agent
    agent = create_openai_functions_agent(llm, tools, prompt)

    # Option 2: assemble a comparable agent by hand with LCEL (overrides option 1)
    llm_with_tools = llm.bind(functions=[convert_to_openai_function(t) for t in tools])
    agent = (
        RunnablePassthrough.assign(
            # Turn earlier tool calls and their results into messages for the model
            agent_scratchpad=lambda x: format_to_openai_function_messages(
                x["intermediate_steps"]
            )
        )
        | prompt
        | llm_with_tools
        | OpenAIFunctionsAgentOutputParser()
    )

    executor = AgentExecutor(name="Analyzer", agent=agent, tools=tools)
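Conceptually, the executor runs a loop: call the model, execute any requested function, append the result to the scratchpad, and repeat until the model returns a final answer. The following plain-Python sketch illustrates that loop with a scripted stand-in for the model. The names run_agent, scripted_model, and fake_trends are hypothetical, and the real AgentExecutor adds error handling, callbacks, and more.

```python
from typing import Any, Callable, Dict, List, Tuple

Scratchpad = List[Tuple[str, Any]]


def fake_trends(country_name: str) -> List[str]:
    """Stand-in for a real tool; returns canned data instead of calling an API."""
    return ["Formula 1", "ChatGPT"] if country_name == "italy" else []


def scripted_model(question: str, scratchpad: Scratchpad) -> Dict[str, Any]:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    if not scratchpad:
        return {"function_call": {"name": "fake_trends", "arguments": {"country_name": "italy"}}}
    _, observation = scratchpad[-1]
    return {"content": "Current trends: " + ", ".join(observation)}


def run_agent(question: str, model: Callable, tools: Dict[str, Callable], max_steps: int = 5) -> str:
    scratchpad: Scratchpad = []  # tool calls and their results so far
    for _ in range(max_steps):
        reply = model(question, scratchpad)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        result = tools[call["name"]](**call["arguments"])
        scratchpad.append((call["name"], result))  # feed the result back to the model
    raise RuntimeError("No final answer within max_steps")


answer = run_agent("What is trending in Italy?", scripted_model, {"fake_trends": fake_trends})
print(answer)
```

The step limit is a design choice in this sketch: without it, a model that keeps requesting tools would loop forever.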

Extending Single LangChain Agents to Multi Agents in LangGraph

LangChain version 0.1 introduced LangGraph, a concept for implementing multi-agent systems. LangGraph organizes communication through a graph instead of a free exchange of messages and a shared chat history.

The interface is modeled on the NetworkX Python library and allows flexible composition of directed graphs, which may also be cyclic.

- First, a graph with a defined state is created.

- Then, nodes and edges are added to the graph, and a starting point is selected.

- The graph's dynamics are determined by either static or conditional edges.

- Both nodes and conditional edges can be simple Python functions or determined using an LLM call.

- These functions receive the current state and return a new state for the next node.

- Finally, all nodes and edges are compiled into a 'Pregel' object.

The Pregel graph implements the LangChain Runnable Interface. It can be executed:

- Synchronously or asynchronously

- As a stream or batch operation
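The steps above can be illustrated without the library. The sketch below is plain Python, not LangGraph's API: MiniGraph, its methods, and the two node functions are hypothetical and only mimic the described behavior (a shared state, static and conditional edges, a cycle, and a compile step that returns something invocable).

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"


class MiniGraph:
    """Hypothetical miniature of a state graph with static and conditional edges."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}
        self.entry = ""

    def add_node(self, name: str, func: Callable[[State], State]) -> None:
        self.nodes[name] = func

    def add_edge(self, source: str, target: str) -> None:
        self.routers[source] = lambda state: target  # static edge: fixed target

    def add_conditional_edge(self, source: str, router: Callable[[State], str]) -> None:
        self.routers[source] = router  # conditional edge: target depends on state

    def set_entry_point(self, name: str) -> None:
        self.entry = name

    def compile(self, max_steps: int = 20) -> Callable[[State], State]:
        def invoke(state: State) -> State:
            current = self.entry
            for _ in range(max_steps):  # guard against endless cycles
                if current == END:
                    return state
                state = self.nodes[current](state)  # node returns the new state
                current = self.routers[current](state)  # edge picks the next node
            raise RuntimeError("Step limit reached")
        return invoke


def researcher(state: State) -> State:
    return {**state, "findings": state["findings"] + ["trend"]}


def reviewer(state: State) -> State:
    return {**state, "approved": len(state["findings"]) >= 2}


graph = MiniGraph()
graph.add_node("researcher", researcher)
graph.add_node("reviewer", reviewer)
graph.add_edge("researcher", "reviewer")
graph.add_conditional_edge("reviewer", lambda s: END if s["approved"] else "researcher")
graph.set_entry_point("researcher")

app = graph.compile()
final_state = app({"findings": [], "approved": False})
print(final_state)
```

In this run the reviewer sends the work back to the researcher once before approving it, which is the kind of cycle a plain linear chain cannot express.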

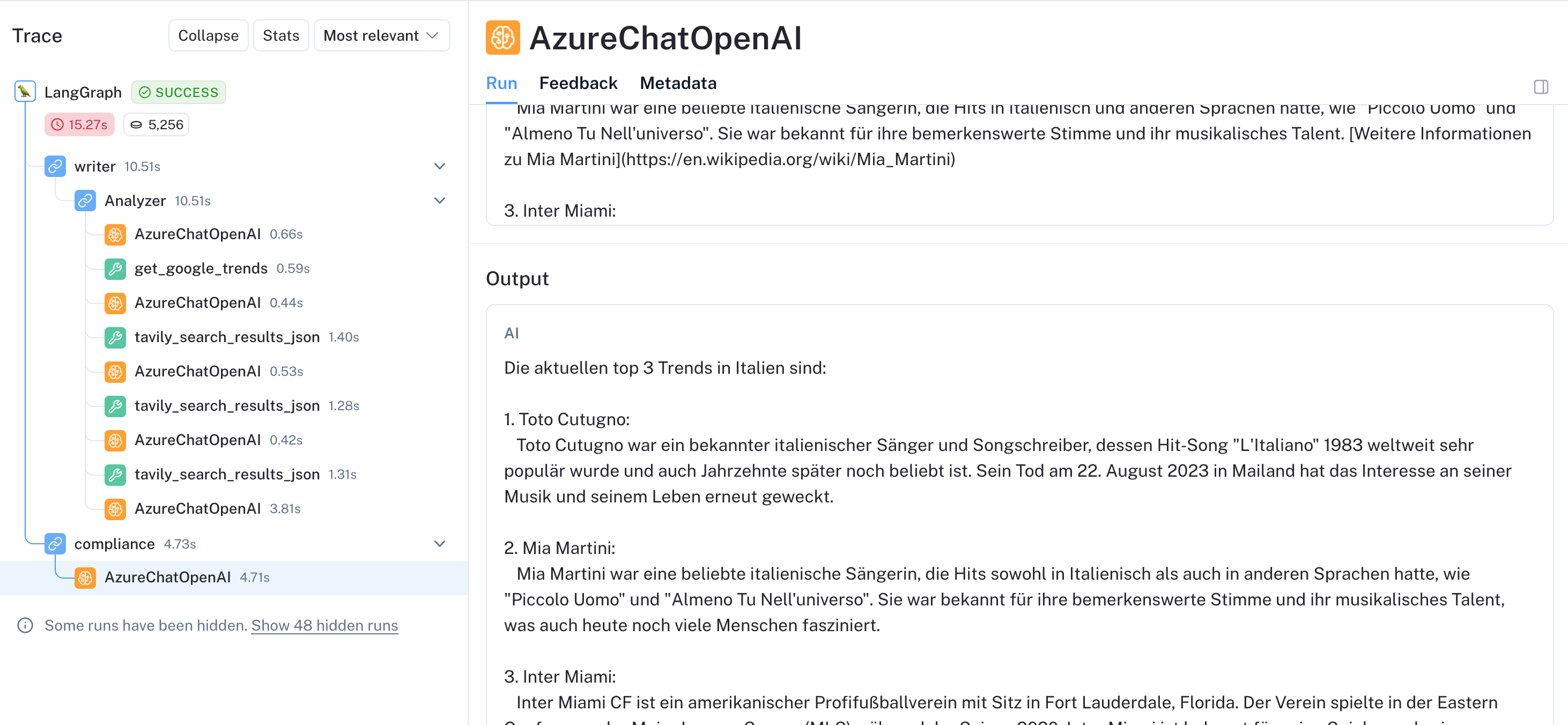

Monitoring of Agents through LangSmith

LangChain aims to hide complexity from the user, which can make debugging more difficult. LangSmith, the monitoring solution developed by LangChain, is helpful in this regard. Integration requires only an API key.
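A minimal sketch of that setup, assuming the environment variables LangSmith documented at the time (LANGCHAIN_TRACING_V2, LANGCHAIN_API_KEY, and optionally LANGCHAIN_PROJECT). The helper name, the placeholder key, and the project name are hypothetical, and the variable names may differ in newer releases.

```python
import os
from typing import Dict


def enable_langsmith_tracing(api_key: str, project: str = "default") -> Dict[str, str]:
    """Set the environment variables that switch on tracing (assumed names)."""
    settings = {
        "LANGCHAIN_TRACING_V2": "true",  # turn tracing on
        "LANGCHAIN_API_KEY": api_key,    # key obtained from LangSmith
        "LANGCHAIN_PROJECT": project,    # groups runs under one project name
    }
    os.environ.update(settings)
    return settings


# Placeholder values; in practice the key comes from a secret store
settings = enable_langsmith_tracing("<your-api-key>", project="multi-agent-demo")
print(sorted(settings))
```

The variables need to be set before the chains or graphs are executed so that their runs are picked up by the tracer.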

After obtaining the key, all events are streamed to the cloud and displayed in a user-friendly WebUI. This provides quick insight into all executed operations, such as:

- LLM API calls

- Executed tools

- Errors that have occurred

The system tracks:

- Execution times

- Generated tokens and their costs

- Various system metadata

Additionally, custom key-value pairs can be logged.

To have more control or to avoid using LangSmith, you can include your own callback in the workflow to handle events that occur within it. Callbacks are also useful for integrating your own user interface.

The implementation can be complex, as there are 14 different event types that are relevant for:

- Start of various actions

- End of various actions

- Errors of various actions
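A plain-Python sketch of such a callback follows. It mirrors the start, end, and error pattern and the run_id and parent_run_id arguments that LangChain passes to its handlers, but it does not subclass the real BaseCallbackHandler and covers only two of the event families. The class name, the simplified signatures, and the recorded fields are hypothetical.

```python
import time
import uuid
from typing import Any, Dict, List, Optional


class EventRecorder:
    """Hypothetical handler that records start, end, and error events per run."""

    def __init__(self) -> None:
        self.runs: Dict[uuid.UUID, Dict[str, Any]] = {}

    def _start(self, kind: str, run_id: uuid.UUID, parent_run_id: Optional[uuid.UUID]) -> None:
        self.runs[run_id] = {"kind": kind, "parent": parent_run_id,
                             "started": time.perf_counter(), "status": "running"}

    def _finish(self, run_id: uuid.UUID, status: str) -> None:
        run = self.runs[run_id]
        run["status"] = status
        run["seconds"] = time.perf_counter() - run["started"]

    # Method names follow LangChain's handler naming; signatures are simplified
    def on_llm_start(self, run_id: uuid.UUID, parent_run_id: Optional[uuid.UUID] = None) -> None:
        self._start("llm", run_id, parent_run_id)

    def on_llm_end(self, run_id: uuid.UUID) -> None:
        self._finish(run_id, "done")

    def on_llm_error(self, run_id: uuid.UUID) -> None:
        self._finish(run_id, "error")

    def on_tool_start(self, run_id: uuid.UUID, parent_run_id: Optional[uuid.UUID] = None) -> None:
        self._start("tool", run_id, parent_run_id)

    def on_tool_end(self, run_id: uuid.UUID) -> None:
        self._finish(run_id, "done")

    def on_tool_error(self, run_id: uuid.UUID) -> None:
        self._finish(run_id, "error")

    def children_of(self, parent_run_id: uuid.UUID) -> List[Dict[str, Any]]:
        # Follow the parent link to find everything a run triggered
        return [run for run in self.runs.values() if run["parent"] == parent_run_id]


recorder = EventRecorder()
tool_id, llm_id = uuid.uuid4(), uuid.uuid4()
recorder.on_tool_start(run_id=tool_id)
recorder.on_llm_start(run_id=llm_id, parent_run_id=tool_id)
recorder.on_llm_error(run_id=llm_id)
recorder.on_tool_end(run_id=tool_id)
print([run["kind"] for run in recorder.children_of(tool_id)])
```

Keying every record by run ID and storing the parent ID is what makes it possible to reassemble nested calls into a tree afterwards.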

Unfortunately, we couldn't extract all the desired information in every event. In some cases, we had to resort to linked parent events.

To address this issue, we developed a graph callback that can also help visualize the complexity of the hidden calls within a LangGraph call.

Comparison between LangGraph and AutoGen

| | AutoGen | LangGraph |
| --- | --- | --- |
| Project status | AutoGen is a popular framework for multi-agent systems. Microsoft is driving the project, which is based on a scientific paper. | LangGraph, the multi-agent solution for the popular LLM framework LangChain, was released in January 2024. It combines initial experience from AutoGen with approaches from established open source projects such as NetworkX and Pregel. LangGraph will continue to be a component of the LangChain ecosystem. |
| Function calling | AutoGen uses a user proxy agent to execute all functions, either natively or within a Docker container for added security. | LangChain allows for the transformation of functions and agents into executables, simplifying the structure. However, this feature does not currently provide any additional virtualization. |
| Message flow | AutoGen uses a user proxy agent to execute all functions, either natively or within a Docker container for added security. | The communication is represented by a graph. This makes it easy and intuitive to map specific communication paths. Conditional edges can also be used to map open group conversations between agents. |
| Usability | AutoGen makes it easy to use multiple agents with its examples and AutoGen Studio. However, modifying more than just prompts and tools requires extending the actual agent classes, which can make upgrades and maintenance more challenging. | LangChain is a powerful framework that tries to hide complexity from the user, but requires the user to learn many framework peculiarities. The high level of abstraction is often a barrier, especially at the beginning. However, once a user has understood the specifics of LangChain, using LangGraph is easy and intuitive. |
| Maturity | AutoGen is a good starting point for multi-agent projects. However, it may be challenging to implement production use cases due to unreliable group conversations and a lack of monitoring support. The shared chat history of the agents can cause executed prompts to become lengthy, resulting in slow and costly processes. | LangGraph is young software with a solid foundation. The LangChain ecosystem provides various output parsers and error management options. LangGraph allows for precise control over the availability of information for each node and can flexibly map business requirements for communication flow. Additionally, it is supported by its serving and monitoring infrastructure. |

Conclusion

Multi-agent systems are useful for building complex autonomous or semi-autonomous systems. They allow for the definition of a specific agent for each subtask, including:

- Prompting

- Model selection

- Configuration

AutoGen has made a valuable contribution to multi-agent systems and is well-suited for initial experiments. However, if you require more precise control over agent communication or need to build a production application, LangGraph is a better choice.

We recently converted an application from AutoGen to LangGraph:

- The implementation of the agents and tools was relatively simple.

- The biggest effort was migrating the UI connection so that all necessary information about tool and LLM usage is captured via a LangGraph callback.

- In contrast, deployment through LangServe is very straightforward.

Additionally, at the time of writing, neither framework natively supports parallel execution of agents whose results are to be merged afterwards.

LangGraph and LangSmith can be used to create and operate complex workflows involving LLMs. They offer a range of possibilities for managing and processing language data.