- Introduction

Large Language Models (LLMs) like GPT-4 have ushered in a transformative era in artificial intelligence. They can produce human-like language, comprehend complex information, and complete tasks that previously required human intelligence. GPT-4, one of the models behind ChatGPT, scored around the top 10% of test-takers on a simulated bar exam and quickly gained widespread adoption across business, healthcare, and creative industries. ChatGPT itself reached an estimated 100 million users within two months of launch, which at the time made it the fastest-growing consumer application on record.

Yet for all their impressive capabilities, LLMs have inherent limitations. On their own, they are largely disconnected from real-time data, environmental inputs, and evolving user context: their responses are confined to the static data they were trained on and the immediate input the user provides. As a result, they may hallucinate facts, fail to adapt to ongoing conversations, and misread intent for lack of situational awareness. These constraints call for the next step in AI’s evolution: the Model Context Protocol (MCP).

Introduced by Anthropic in November 2024 as an open-source standard, MCP, in Anthropic’s words, 'provides a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol. The result is a simpler, more reliable way to give AI systems access to the data they need.'

The context delivered this way can include not only language but also images, sounds, environmental signals, historical records, and real-time updates. By integrating such diverse information sources, MCP aims to enable a new generation of AI that mirrors human decision-making more closely and delivers more grounded, useful, and adaptable outputs.

- What is MCP?

MCP is an open protocol that standardizes how applications provide context to large language models (LLMs). It enables seamless integration between LLM-based applications and external data sources or tools. Whereas a standalone LLM takes in text and outputs text, an MCP-enabled system can draw on a much wider array of inputs: written language, visual cues, audio signals, sensory data, user history, and dynamic real-world information.

Before MCP, integrating AI models with external data required custom code or specialized plugins for each data source, leading to complex and fragile systems. MCP streamlines this by offering a structured approach that enhances flexibility and interoperability, allowing AI agents to access the necessary context efficiently.
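The consolidation argument can be made concrete with a rough count. Assume, for simplicity, that each of $M$ AI applications needs access to each of $N$ data sources. Bespoke integrations then grow multiplicatively, whereas a shared protocol needs only one client implementation per application and one server per source:

```latex
\underbrace{M \times N}_{\text{custom integrations}}
\quad\text{vs.}\quad
\underbrace{M + N}_{\text{with MCP}},
\qquad \text{e.g. } M = 4,\ N = 10:\quad 4 \times 10 = 40 \ \text{vs.}\ 4 + 10 = 14.
```

The gap widens as either side grows, which is the practical meaning of replacing fragmented integrations with a single protocol.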

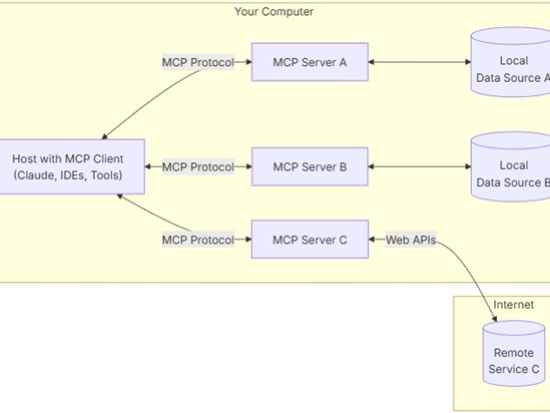

Technically, MCP operates on a client-server architecture, facilitating seamless communication between AI applications and various data repositories. The key components of MCP are:

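MCP Clients: Protocol connectors that live inside AI applications (the "hosts", such as Claude Desktop, an IDE, or another AI tool), each maintaining a one-to-one connection with an MCP server. On behalf of the application and its language model, they initiate requests to MCP servers to retrieve information or perform specific actions.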

MCP Servers: These servers act as intermediaries between the AI applications and the external data sources. They handle requests from MCP clients, interact with the appropriate data repositories or tools, and return the required information in a standardized format.

Local Data Sources: Files, databases, and services on your computer that MCP servers can securely access.

Remote Services: External systems accessible over the internet (e.g., via APIs) that MCP servers can connect to.

Communication Protocol: MCP standardizes the interaction between clients and servers: messages are encoded as JSON-RPC 2.0 and carried over a transport such as standard input/output (for local servers) or HTTP (for remote ones). Because every server speaks the same protocol, AI models can access and use external data without a custom integration for each source, which simplifies development and improves scalability.
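To make the message format concrete, here is a minimal Python sketch of what a client writes to a local server over the stdio transport. It assumes the JSON-RPC 2.0 framing described in the MCP specification (one JSON object per line) and the `2024-11-05` protocol version string. The tool name `get_weather`, its arguments, and the client name are hypothetical placeholders, and a real client would use an official MCP SDK and wait for each response before sending the next request.

```python
import json
from itertools import count
from typing import Any, Dict, List, Optional

# Request ids only need to be unique within a session; a counter is the simplest choice.
_ids = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request (it expects a response, so it carries an id)."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 notification (no id, so no response is expected)."""
    return {"jsonrpc": "2.0", "method": method}


def encode_stdio(msg: Dict[str, Any]) -> str:
    """Frame one message for stdio: a single line of JSON terminated by a newline."""
    # json.dumps escapes newlines inside strings, so the line never contains a raw one.
    return json.dumps(msg, separators=(",", ":")) + "\n"


def decode_stdio(stream: str) -> List[Dict[str, Any]]:
    """Split newline-delimited stdio output back into individual messages."""
    return [json.loads(line) for line in stream.splitlines() if line.strip()]


# Opening of a session: handshake, confirm it, discover tools, then call one.
# (Shown back to back for brevity; a real client interleaves these with server responses.)
messages = [
    make_request("initialize", {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical client
    }),
    make_notification("notifications/initialized"),
    make_request("tools/list"),
    make_request("tools/call", {
        "name": "get_weather",            # hypothetical tool exposed by the server
        "arguments": {"city": "Berlin"},
    }),
]

wire = "".join(encode_stdio(m) for m in messages)
print(wire, end="")
```

Each line on the wire is a complete JSON object, which is why the sketch serializes without indentation: pretty-printed JSON would span several lines and break the stdio framing.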

In short, think of MCP as a 'USB-C port for AI applications'.

Figure 1: MCP Architecture

The Model Context Protocol provides a framework for connecting AI agents to persistent memory and external data feeds, allowing for adaptive, context-aware interaction. Implementations built on it illustrate how MCP is shifting AI away from static, one-shot interactions toward continuous, richly informed conversations and decisions.
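On the server side, the same messages map onto a small dispatch table. The following Python sketch is a toy, in-process illustration under stated assumptions: it handles only the `resources/list`, `resources/read`, and `tools/call` methods (names taken from the MCP specification), skips the `initialize` handshake and any transport, and exposes a hypothetical `memory://` note store plus a hypothetical `remember` tool to show how an agent might persist context between conversations. Error codes are the generic JSON-RPC 2.0 ones; production servers would normally be built with an official MCP SDK.

```python
from typing import Any, Callable, Dict

# Hypothetical persistent-memory store; a real server might back this with a file or database.
NOTES: Dict[str, str] = {"memory://notes/preferences": "User prefers metric units."}

# Generic JSON-RPC 2.0 error codes.
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602


class RpcError(Exception):
    """Raised by handlers to produce a JSON-RPC error response."""

    def __init__(self, code: int, message: str) -> None:
        super().__init__(message)
        self.code = code
        self.message = message


def list_resources(params: Dict[str, Any]) -> Dict[str, Any]:
    """Advertise every stored note as a readable resource."""
    return {"resources": [
        {"uri": uri, "name": uri.rsplit("/", 1)[-1], "mimeType": "text/plain"}
        for uri in NOTES
    ]}


def read_resource(params: Dict[str, Any]) -> Dict[str, Any]:
    """Return the text of one note, identified by its URI."""
    uri = params.get("uri")
    if uri not in NOTES:
        raise RpcError(INVALID_PARAMS, f"Unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": NOTES[uri]}]}


def call_tool(params: Dict[str, Any]) -> Dict[str, Any]:
    """Run the single hypothetical tool, `remember`, which stores a new note."""
    if params.get("name") != "remember":
        raise RpcError(INVALID_PARAMS, f"Unknown tool: {params.get('name')}")
    args = params.get("arguments", {})
    if "key" not in args or "text" not in args:
        raise RpcError(INVALID_PARAMS, "remember needs 'key' and 'text' arguments")
    NOTES[f"memory://notes/{args['key']}"] = args["text"]
    return {"content": [{"type": "text", "text": f"Stored note '{args['key']}'."}], "isError": False}


HANDLERS: Dict[str, Callable[[Dict[str, Any]], Dict[str, Any]]] = {
    "resources/list": list_resources,
    "resources/read": read_resource,
    "tools/call": call_tool,
}


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Map one JSON-RPC request to a response; transport and handshake are out of scope."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    handler = HANDLERS.get(request.get("method", ""))
    try:
        if handler is None:
            raise RpcError(METHOD_NOT_FOUND, f"Method not found: {request.get('method')}")
        return {**base, "result": handler(request.get("params", {}))}
    except RpcError as err:
        return {**base, "error": {"code": err.code, "message": err.message}}


# Usage: store a note through the tool, then read it back as a resource.
print(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
              "params": {"name": "remember",
                         "arguments": {"key": "trip", "text": "Flying to Lisbon in May."}}}))
print(handle({"jsonrpc": "2.0", "id": 2, "method": "resources/read",
              "params": {"uri": "memory://notes/trip"}}))
```

Splitting the work into a tool (which changes state) and a resource (which only exposes data) mirrors the distinction MCP draws between actions a model can invoke and context an application can read.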

- Advantages and Use Cases

Integrating multiple context streams gives MCP-enabled systems a series of advantages over standalone LLMs. One of the most significant is improved accuracy. When an AI model can cross-reference visual evidence, retrieve real-time facts, and draw on a user’s past preferences, the risk of hallucination can drop and the relevance of its responses can rise. This enriched context also helps the AI grasp the nuances of a situation, something standalone LLMs often miss.

Consider a healthcare setting. An MCP-enabled assistant could simultaneously analyze a patient’s medical history, interpret an X-ray image, process live sensor data, and consult the latest research to suggest a potential diagnosis. This holistic view would support a far better-informed decision than a text-only model could offer. Likewise, in a smart home, MCP could help manage temperature and lighting by integrating environmental sensor data, user schedules, and weather forecasts.

In the enterprise world, MCP transforms digital assistants into true collaborators. Imagine a virtual assistant that not only listens to a business meeting but also analyzes visual slides, accesses relevant project documents, and identifies action items based on participants’ speech and prior correspondence. Content creation also benefits significantly: a marketing team might provide a campaign brief, brand imagery, and social media trends. An MCP system could combine all this to generate consistent, targeted multimedia content.

This multi-domain applicability underscores MCP’s versatility. It breaks down the silos between tasks and data types, enabling one AI system to perform a range of functions that previously required multiple separate tools. This is a crucial step toward the vision of a universal assistant—an AI that can help users across domains, from writing code and interpreting documents to identifying objects and analyzing data.

- Challenges and Criticism

While MCP has huge potential, it also introduces several new challenges. First and foremost is the issue of computational complexity. OpenAI notes that as models expand in scope and context windows, the demand for specialized hardware and optimized infrastructure increases significantly. This can lead to increased costs, longer response times, and higher barriers to adoption.

Moreover, the integration of various data sources, many of which are personal or sensitive, amplifies privacy and security concerns. An MCP system that accesses audio feeds, camera input, personal calendars, and chat histories must be held to high standards of data governance. There’s a real risk of misuse if such systems are not transparently managed or if access controls are weak.

Another concern lies in bias and fairness. Each modality (text, images, audio) can introduce its own biases. When combined, these biases may be amplified, leading to unfair outcomes. A survey from June 2024 examines fairness and bias in large multimodal models (LMMs), highlighting the challenges and proposing mitigation strategies. Without proper auditing tools and inclusive training data, MCP systems may reinforce existing inequalities.

Perhaps most critically, MCP-based systems tend to operate as black boxes. The complex interplay between different context inputs makes it difficult to explain why a system made a particular decision. In high-stakes areas like healthcare, law, or finance, this lack of interpretability can limit trust and adoption. Addressing this will require new transparency tools that help users and developers trace how context was used and weighed.

- Relevance for Stakeholders

The rise of MCP has implications for various audiences. For AI developers and engineers, it opens up a frontier of innovation. By building MCP-compliant architectures, developers can create AI applications that are more adaptive, modular, and integrated with external data. Open standards like Anthropic’s MCP, together with its official SDKs, make it easier to connect AI agents to tools and context sources, enabling plug-and-play multimodal functionality.

For business leaders and decision-makers, MCP promises strategic value. As AI becomes embedded in customer service, analytics, and operations, the ability to deliver hyper-personalized, context-aware experiences becomes a competitive differentiator. Gartner has predicted that by 2026, traditional search engine volume will drop by 25% as users increasingly turn to AI chatbots and other virtual agents for information and services. Businesses that adopt MCP can respond to this shift by offering more intuitive, responsive digital interfaces.

For general users, MCP-enhanced systems offer more natural and effective interactions. An AI assistant that can “see” what a user sees, remember previous conversations, and draw on external data sources can provide much more useful help. Whether it’s recommending dinner based on a fridge photo, or offering travel tips that consider a user’s past preferences and current location, the impact is tangible. However, users will also need to become more aware of the implications for data sharing and system oversight. The potential for MCP to enrich lives is vast—but only if implemented with user trust and ethical transparency at the forefront.

- Conclusion

MCP represents a critical turning point in the development of AI. Building on the powerful foundation of LLMs, MCP extends the boundaries of what AI can perceive, process, and do. By synthesizing diverse forms of context—linguistic, visual, auditory, environmental, historical—it allows AI systems to become more capable, grounded, and human-like in their interactions.

Already, AI companies are demonstrating MCP’s potential: their tools are moving beyond chatbots to become fully contextual digital agents capable of assisting with complex, real-world tasks. The transition to MCP will require addressing serious technical and ethical challenges, from computational costs and bias to privacy and explainability.

In the coming years, MCP may well become the backbone of next-generation AI systems. Its relevance spans industries and user types, promising better decision support, richer user experiences, and a more seamless integration of AI into daily life. Like the shift from mobile-first to AI-first computing, the MCP paradigm may redefine how we engage with technology—and how it understands us.

Further Readings: